When AI Doesn't Sound Like Us: Evaluation Rubrics and Iteration Strategies

We've built our style spec. We've set up Claude or ChatGPT with clear rules. These cover sentence length, word choices, and rhetorical patterns. We make our first piece of content.

It's.. not quite right. It's better than before. The sentence lengths are closer. The words feel more like ours. But something's still off. The rhythm doesn't flow right. The tone swings between spots that feel like us and spots that feel like a bland blog post.

This is normal. The spec is just the start. The real work is testing and refining.

This article shows a step-by-step way to check AI output against our style. We'll learn to spot common failures. Then we'll fix our spec until AI content needs light edits, not full rewrites.

Building an Evaluation Rubric

Most writers judge AI output with one question: "Does this sound good?" That's the wrong question. "Good" is vague. It shifts with mood, context, and caffeine. The right question is: "Does this match our patterns?"

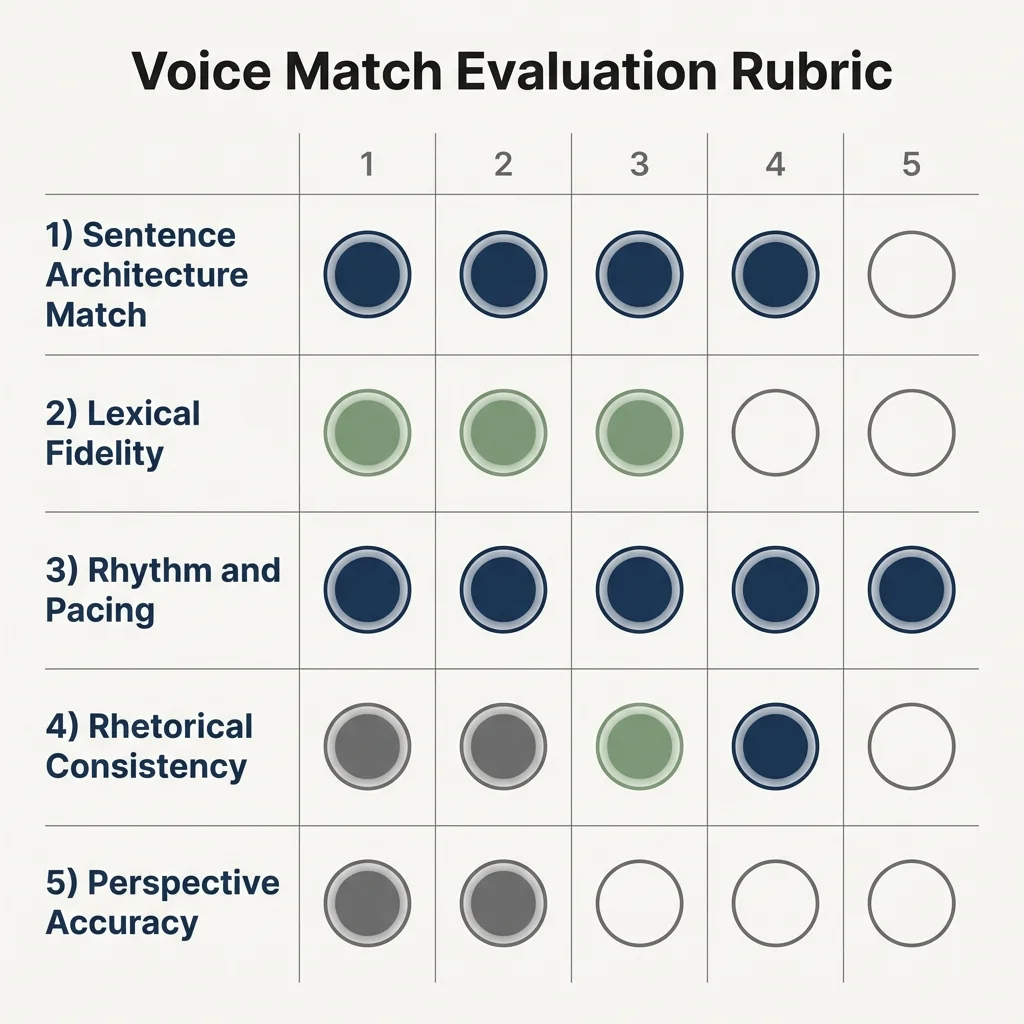

The five-point rubric turns vague feelings into scores. Each area maps to the stylometry traits from Article 2. This makes the link between analysis and grading clear.

The Five-Point Voice Evaluation

1. Sentence Architecture Match (1-5)

Does the average sentence length match our spec? If it calls for 18-word averages, count a sample. Is sentence variety like ours? Check the mix of simple, compound, and complex forms. Are fragments used the way we use them, for emphasis at key moments? Or are they scattered at random?

2. Lexical Fidelity (1-5)

Did the AI use our preferred words? Check for the terms in our spec. Did it avoid our "never use" list? Search for jargon or overused words we flagged. Is the contraction rate right? If we write "don't" and "we're" in our prose, the AI should too.

3. Rhythm and Pacing (1-5)

Do paragraph lengths match ours? Some writers like short, punchy chunks. Others build longer blocks. Is the punctuation right? If we use em-dashes often, the output should too. If we skip semicolons, none should appear. Does the piece "breathe" like ours? Look for pace shifts, fast parts, and pauses.

4. Rhetorical Alignment (1-5)

Are ideas brought in the way we'd do it? Some writers lead with examples. Others start with a definition or a question. Is evidence used in our style? Check if it's woven in or cited formally. Are banned patterns gone? If we said "never open with a question," confirm the AI obeyed.

5. Stance Consistency (1-5)

Is the person usage right throughout? If our spec calls for "we," there should be no shifts to "one" or passive voice. Is the hedging level correct? Some writers hedge often ("tends to," "may"). Others make direct claims. Does it sound like us? Or does it sound like a generic writer talking to a generic crowd?

Scoring Interpretation

Common Failure Patterns and Fixes

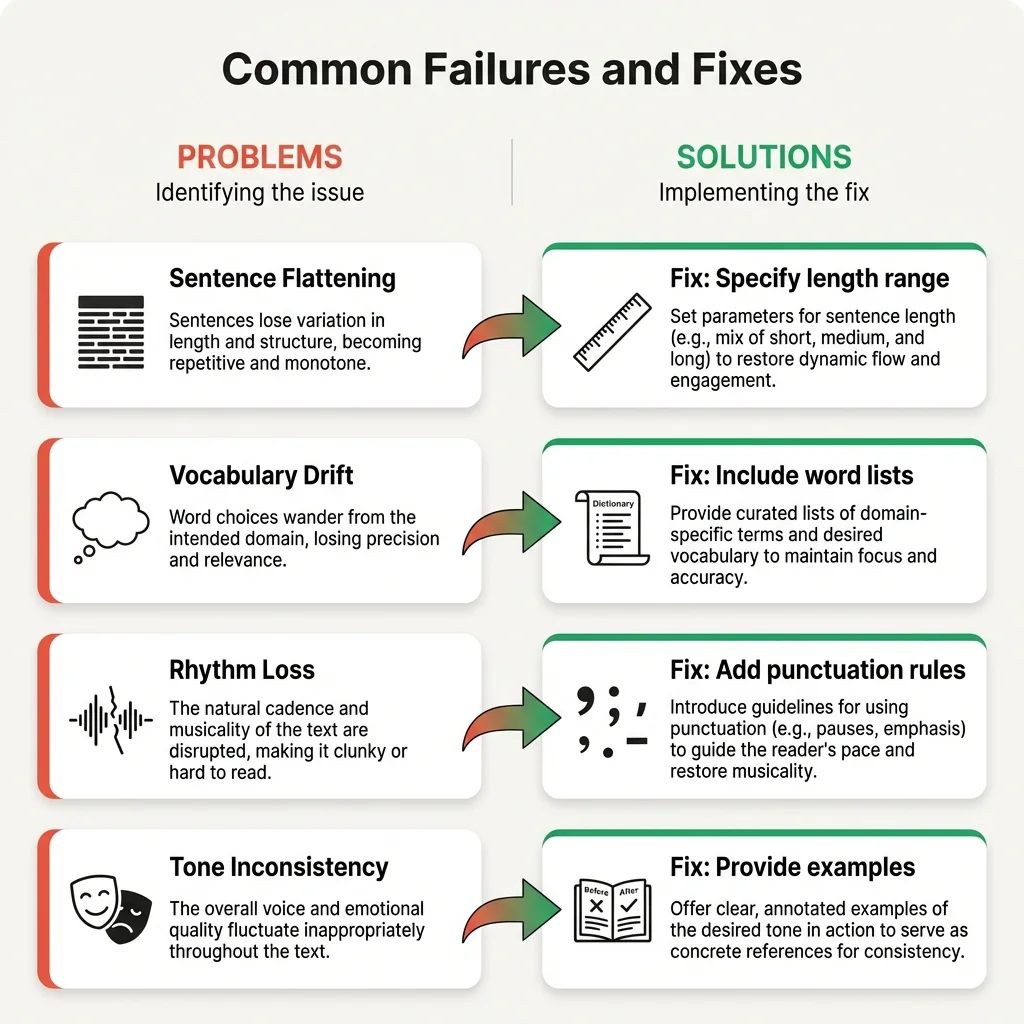

When AI output scores low on a given area, the cause often traces to how the spec was written. These five patterns show up again and again.

Pattern 1: The Vocabulary Slip

Symptom: The AI uses words from our "avoid" list. Or it skips our preferred terms. We said "use 'show'" but "show" appears three times.

Diagnosis: Word guidance is buried in the spec. The AI saw it but didn't give it enough weight.

Fix: Move word lists to the top of the spec. Add stress: "CRITICAL: Never use these words." Where we place the rule affects how the AI ranks it.

Pattern 2: The Rhythm Drift

Symptom: Output sounds flat. Sentences are all the same length. Paragraphs march in step. The variety that makes prose feel alive is gone.

Diagnosis: The AI fell back on safe, uniform forms. Our spec named averages but didn't stress variety.

Fix: Add sample text that shows range. Give clear ranges: "Sentences run from 8 to 35 words, with an average near 18. Short ones should follow long ones for contrast." Show, don't just tell.

Pattern 3: The Tone Mismatch

Symptom: The output swings between too formal and too casual. Or it stays in the wrong register. We said "warm but smart" and got "corporate with odd slang."

Diagnosis: The voice section was too vague. "Warm but smart" means different things to each reader and to each AI.

Fix: Add concrete tone examples. Show a sentence that's too formal. Then the same idea at our real level. Then too casual. This teaches the AI where we sit on the spectrum.

Pattern 4: The Structure Override

Symptom: We wanted a certain opening. But the AI forced its own intro-body-end format. We said "start with an example" and got "In today's fast-paced world.."

Diagnosis: The AI's default training is overriding our spec. It has strong habits about how articles should begin.

Fix: Be blunt about structure. Prompt section by section rather than asking for a full piece. For openings, write the first line ourselves and ask the AI to continue.

Pattern 5: The Context Collapse

Symptom: Voice holds up on our usual topics. But it breaks when the subject shifts. Our tech writing sounds like us; our finance writing sounds bland.

Diagnosis: Our spec was built on samples from one subject. The patterns don't carry over to new topics.

Fix: Add samples from different topics to our spec. Build topic-based variants if needed. Use a base spec plus tweaks for technical, narrative, or persuasive content.

The Iteration Loop

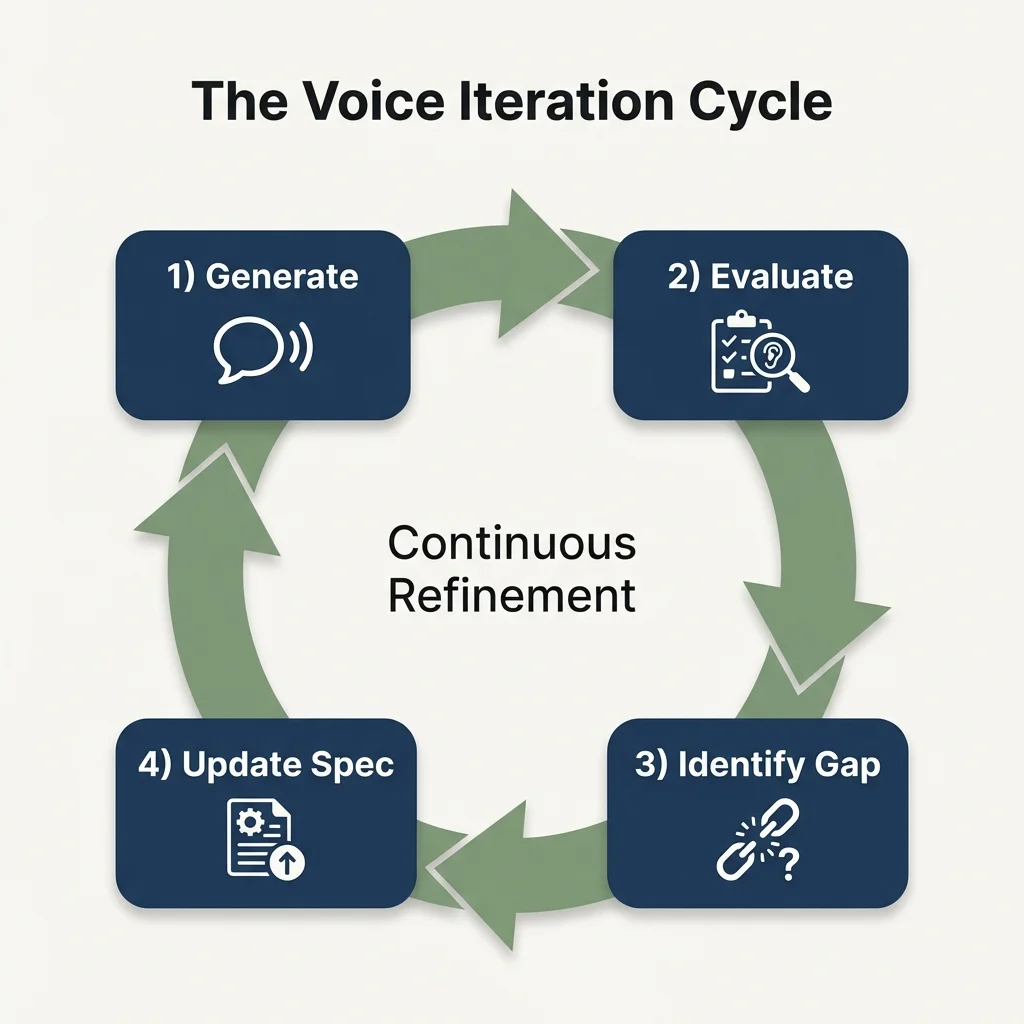

Improving AI output isn't a one-time setup. It's a cycle. We generate, evaluate, diagnose, and refine.

The key rule: fix one area at a time. Don't try to fix it all at once. If sentence structure scored 2 and word choice scored 3, fix structure first. Check that it helped. Then fix words.

This focused method has two gains. First, we can tell what works. If we change five things at once, we can't tell which one helped. Second, we avoid overdoing it. Specs that try to control all things make AI output lose all flow.

Tracking Changes

Keep a log of what failed and what we changed. Note whether the change helped. After three to five rounds, patterns emerge. These become lasting additions to our spec. They're the tweaks that moved us from bland output to real voice match.

When to Stop Iterating

- Rubric scores consistently hit 20 or above

- We can edit AI output to final draft in under fifteen minutes

- Output "feels like us" on first read, before we start analyzing

The goal isn't perfection. The goal is speed. When AI output needs light edits, not rewrites, we've hit real teamwork.

Maintaining Consistency Over Time

Style specs aren't fixed. Our writing evolves. Platform updates change how AI reads our rules. New topics and readers may need tweaks.

The Style Drift Problem

Three factors cause specifications to become stale:

Our voice evolves. The samples from six months ago may not match how we write today. Writers shift over time. We get more concise. We pick up new habits. We drop old verbal tics.

Platforms update. Claude and ChatGPT get regular updates. These can change how they read our rules. A spec that worked in March may give different results in September.

Context shifts. A new project, audience, or format may push our spec in ways it wasn't built to handle.

Quarterly Review Protocol

Every three months, run this diagnostic:

- Make three test pieces on different topics using our current specification

- Score each against the rubric

- Compare to our recent human writing, not old samples, but what we wrote this past month

- Update the spec if patterns have shifted

This catches drift early. A spec that scores 22 today might score 17 in six months if we don't maintain it.

Version Control for Specifications

Date each spec. Keep a log of what we changed and why. Keep separate specs for different uses if our writing varies by audience or format.

This isn't wasted effort. It's what keeps the tool working. Without it, the AI slowly drifts from our voice. And we wonder what went wrong.

The Complete Workflow

Across this series, we've built a step-by-step approach to AI writing that keeps our voice:

- See the problem (Article 1): Vague labels don't work as AI instructions

- Analyze our patterns (Article 2): Measure voice across five stylometry areas

- Build a spec (Article 3): Create a document that captures our patterns

- Use and set up (Article 3): Load the spec into Claude or ChatGPT

- Score the output (Article 4): Grade it against a rubric tied to our traits

- Iterate with purpose (Article 4): Refine the spec based on clear, diagnosed failures

- Maintain over time (Article 4): Run quarterly reviews and keep version control

The goal throughout: AI as a real co-writer, not costly autocomplete. First drafts that need edits, not rewrites. A voice that stays ours, even when a machine made the words.

Start Here

The system works, but only if we use it. Pick one recent piece of AI content. Score it on all five areas. Find the lowest score. Spot the failure pattern. Update the spec. Then regenerate and compare.

One round won't transform our AI work. But one round teaches us the cycle. After five rounds, we'll have a spec that gives us real output. After twenty, we'll wonder how we ever worked without one.

Our voice is measurable and teachable. The spec bridges our patterns and AI power. Scoring is quality control. Iteration is refining. Do the work, and the teamwork becomes truly useful.